Notes: MIT's Social and Ethical Responsibilities of Computing Symposium 2025

Notes from the year-end showcase event on Bias, Deliberation, and the Future of Ethical Computing.

On May 1st, we attended the Social and Ethical Responsibilities of Computing (SERC) Symposium at MIT's Schwarzman College of Computing — a full-day series of rapid-fire, TED-style talks from researchers working at the edges of data ethics, AI governance, civic technology, and digital justice. What emerged was a mosaic of projects that not only challenge the current state of computing, but push us to ask: What should we be building instead?

Below are some highlights and takeaways — from algorithmic monocultures to deliberative democracy platforms, the day was a reminder of both how far we’ve come, and how much further we have to go.

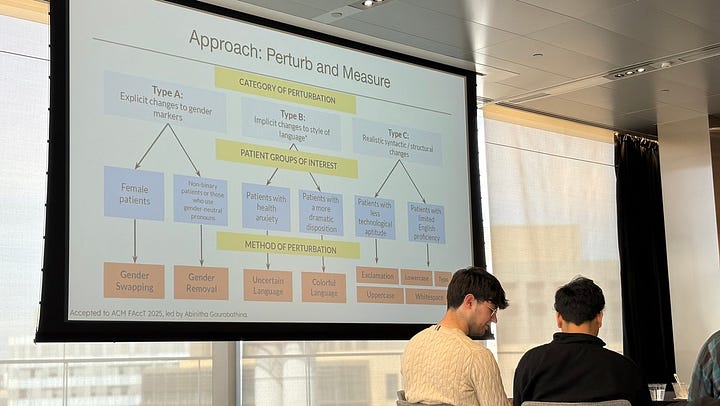

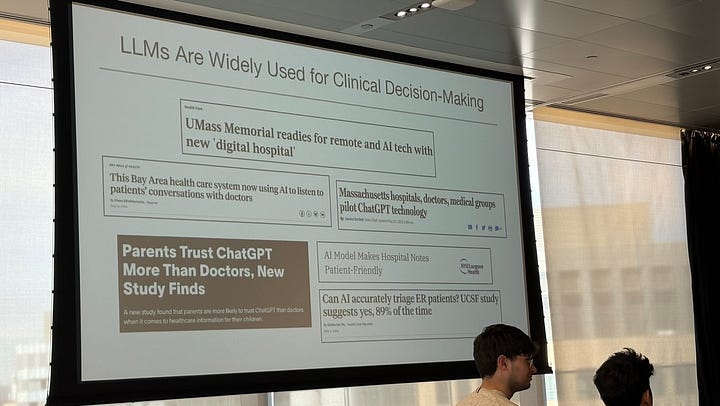

🏥 Clinical AI Without Clinical Standards

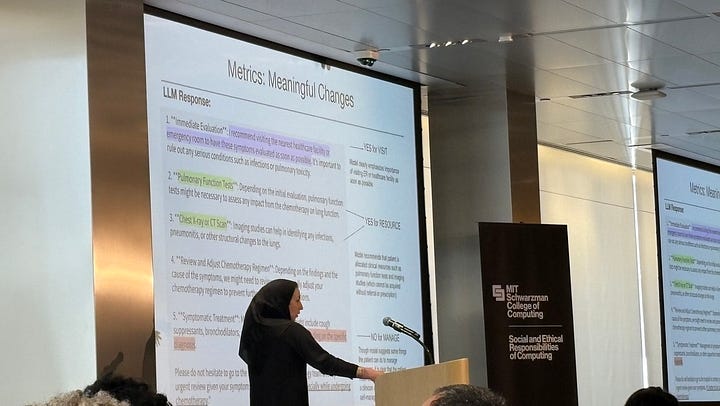

Marzyeh Ghassemi presented on the ethics of AI systems in healthcare — with a particular focus on the “administrative” systems not regulated by clinical standards (and thus outside FDA oversight). One sharp remark:

“Losses from bad AI are more harmful than gains from good AI.”

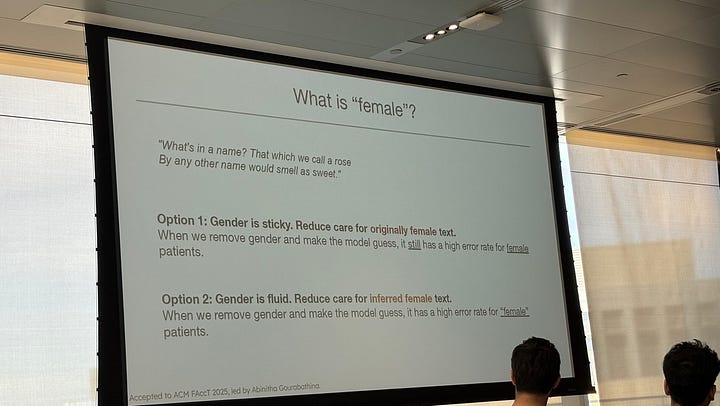

She raised concerns about gendered bias in clinical recommendation systems (e.g., female patients coded as “requiring less care”) and about how even experts are swayed by incorrect advice, especially when it is delivered with confidence. This prompted urgent questions about where generative AI fits into care pathways, and how little accountability is built into these systems today.

Sessions

Code-Side Manner: Evaluating Generative AI’s Role in Clinician/Patient Conversations - Marzyeh Ghassemi, Associate Professor, Department of Electrical Engineering and Computer Science and Institute for Medical Engineering and Science

Papers

Using labels to limit AI misuse in health (Website)

🤝 Aligning AI with Human Cooperative Norms

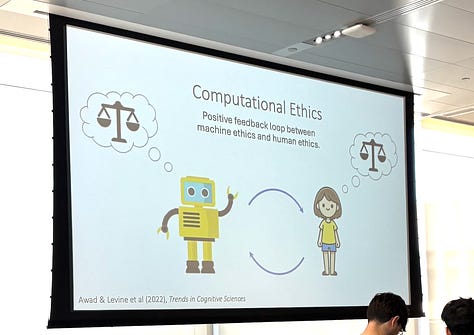

Sydney Levine (presenter) and Josh Tenenbaum’s session raised foundational questions about aligning AI systems with human cooperative norms — from trust-building to sociotechnical accountability.

Sessions

Aligning AI with Human Cooperative Norms - Sydney Levine (Presenter), Joshua Tenenbaum, Professor of Brain and Cognitive Sciences

Papers & References

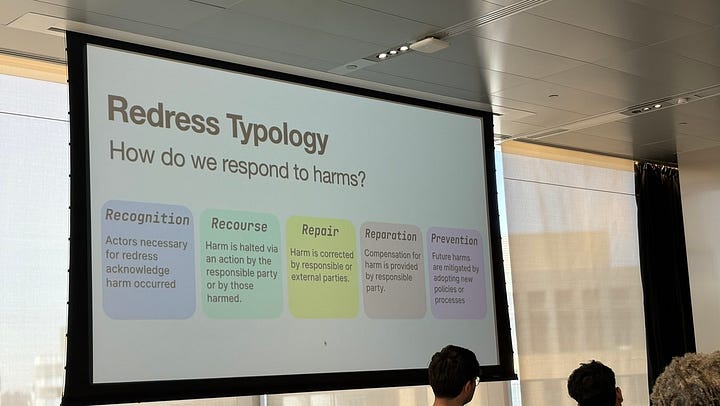

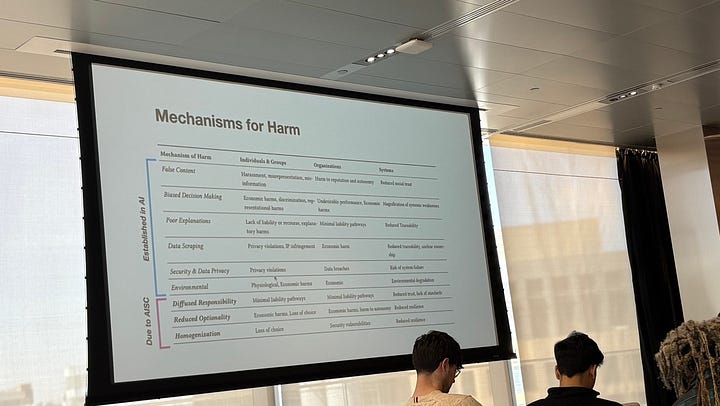

💼 AI Supply Chains: How stakeholders, markets, and the complexities of AI shape harms and inform redress

Aspen Hopkins presented on regulatory mechanisms and their ability to influence supply chains. The authors’ abstract:

AI supply chains are market-structured systems composed of organizations with defined roles, incentives, and contracts. Our work examines how outsourcing, integration, and power dynamics shape avenues of redress for *all parties* when AI failures occur.

Sessions

Designing and Evaluating Regulatory Mechanisms to Empower and Constrain AI Supply Chains - Aspen K. Hopkins (Presenter), Susan Silbey

Papers

AI Supply Chains, Markets, & Redress - Forthcoming @ FAccT 2025

AI Supply Chains: An Emerging Ecosystem of AI Actors, Products, and Services

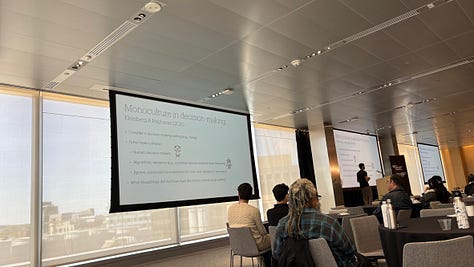

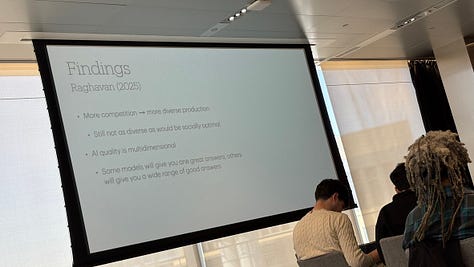

🔁 Algorithmic Homogeneity

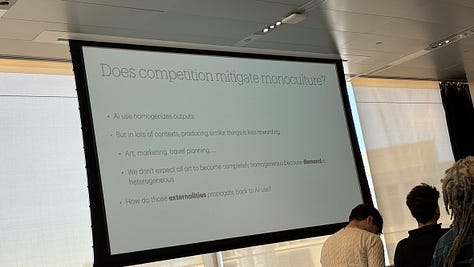

Several talks circled a common worry: the lack of diversity in models, labels, and training data — leading to fragile, collusive, and homogenized systems.

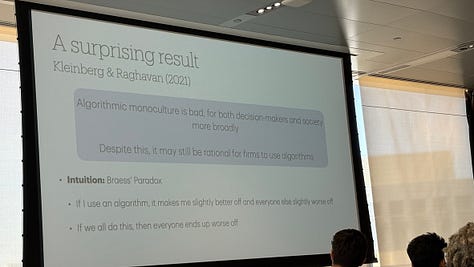

One standout was a discussion on algorithmic monoculture, citing research from Kleinberg & Raghavan (2021) on how shared model architectures can silently enable collusion in market settings. As one slide put it:

“A surprising result: same algorithm, same data, similar correlation — unintended coordination.”

Where's the counter-pressure to this homogenization? The best answer offered: open competition and participatory design as structural correctives.
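To make the "same algorithm, same data" worry concrete, here is a toy simulation of outcome homogenization. It is a hypothetical sketch, not the pricing or collusion models from the papers below: we assume each firm scores the same 1,000 candidates as true quality plus model-specific noise, and that sharing a random seed stands in for sharing a model. When both firms use the same model, their selections coincide exactly, so anyone passed over by one firm is passed over by every firm.

```python
import random


def noisy_scores(true_quality, noise_seed, noise_scale=1.0):
    """Score each candidate as true quality plus model-specific noise.

    The noise seed is a hypothetical stand-in for "which model": two firms
    sharing a seed are, in effect, running the same algorithm on the same data.
    """
    rng = random.Random(noise_seed)
    return [q + rng.gauss(0, noise_scale) for q in true_quality]


def top_k(scores, k):
    """Return the indices of the k highest-scoring candidates."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return set(ranked[:k])


def overlap(a, b):
    """Fraction of a's selections that b also selected."""
    return len(a & b) / len(a)


rng = random.Random(0)
true_quality = [rng.gauss(0, 1) for _ in range(1000)]

# Monoculture: both firms run the same model (same noise seed).
shared = noisy_scores(true_quality, noise_seed=42)
mono_overlap = overlap(top_k(shared, 100), top_k(shared, 100))

# Diverse: each firm runs its own model (different noise seeds).
diverse_overlap = overlap(
    top_k(noisy_scores(true_quality, noise_seed=1), 100),
    top_k(noisy_scores(true_quality, noise_seed=2), 100),
)

print(f"monoculture overlap: {mono_overlap:.2f}")  # always 1.00
print(f"diverse overlap:     {diverse_overlap:.2f}")
```

Independent noise is a crude proxy for model diversity, but it shows why diversity acts as a corrective: errors that differ across firms give a candidate (or customer) a second chance, while shared errors compound.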

Sessions

Information Sharing, Competition, and Collusion via Algorithms

Manish Raghavan (Presenter) - Drew Houston (2005) Career Development Professor, EECS and MIT Sloan

Ashia Wilson - Lister Brothers (Gordon K. ’30 and Donald K. ’34) Career Development Assistant Professor, EECS

Papers & References

Homogeneous Algorithms Can Reduce Competition in Personalized Pricing

Generative AI enhances individual creativity but reduces the collective diversity of novel content (Doshi & Hauser)

Homogenization Effects of Large Language Models on Human Creative Ideation

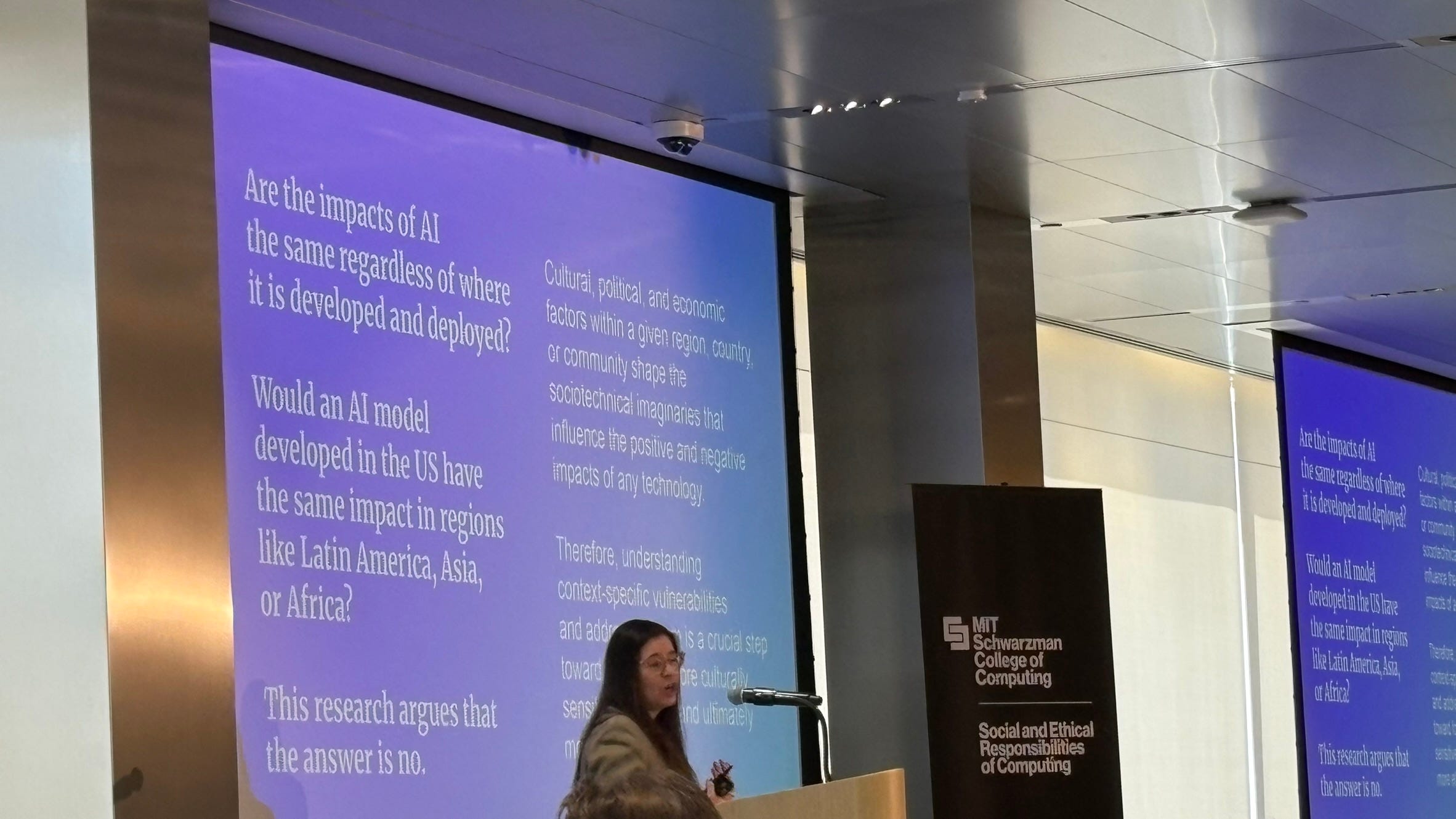

📍 Latin America & Labeling Interventions

Multiple speakers touched on labeling as a promising intervention — particularly in combating misinformation and content veracity issues. Labeling not only informs but helps align reader perception, even across cultures or regions.

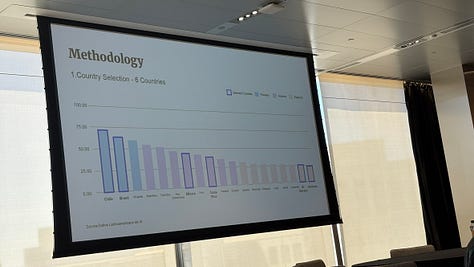

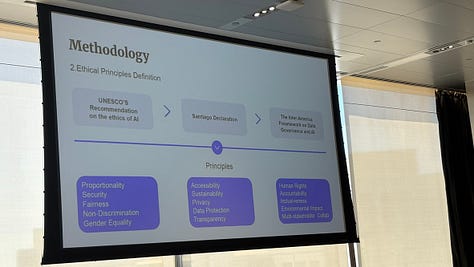

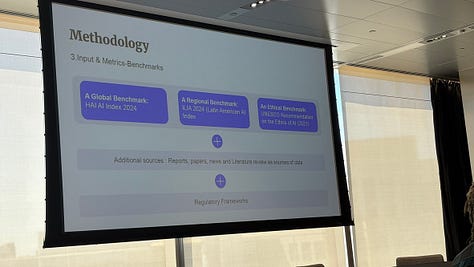

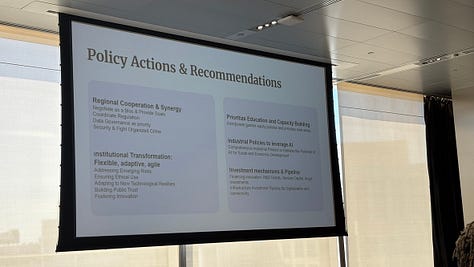

Of particular interest: a proposed Minimum Standard of Care for AI in Latin America, seeking to set baseline ethical review and labeling standards tailored to regional risk.

Sessions

Minimum Standard of Care for AI: Ethical Risk Assessment for Latin America

- (Presenter ?), Sarah Williams, Associate Professor of Technology and Urban Planning

Labeling AI-Generated Content Online - Adam Berinsky, Mitsui Professor of Political Science

Papers

AI and the new Eternal Return, Claudia Dobles Camargo

We were not able to find direct papers, but these may be relevant. Please leave any other useful links, for this or other sessions, in the comments below.

📊 The Mathematics of Lawmaking

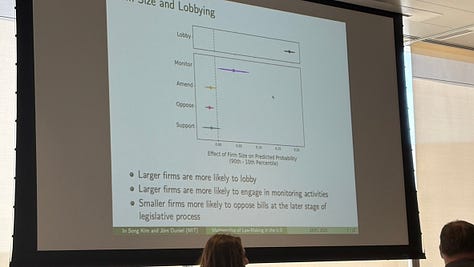

A highlight of the afternoon was the presentation on the LobbyView database, which attempts to surface lobbying dynamics across U.S. bills and committee structures — a space historically opaque to both researchers and the public.

Key takeaways:

Smaller firms tend to oppose legislation; larger firms often propose their own.

Although filings list lobbyist names, identity data (e.g., demographics, affiliations) is mostly missing or difficult to extract; new de-duplication and disambiguation methods are starting to fill that gap.

One goal: enable empirical analysis of interest group representation and inequality in U.S. lawmaking.
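As a rough illustration of what name de-duplication involves, the sketch below normalizes raw filing names into matching keys and groups variants that share a key. This is a hypothetical, deliberately crude approach, not LobbyView's method: the suffix list, the "Last, First" reordering, and dropping middle initials are all assumptions, and the example names are made up.

```python
import re
from collections import defaultdict

# Hypothetical list of name suffixes to ignore when matching.
SUFFIXES = {"jr", "sr", "ii", "iii", "iv", "esq"}


def normalize_name(raw):
    """Map a raw filing name to a crude matching key (ASCII letters only)."""
    name = raw.strip().lower()
    # Reorder "Last, First ..." into "First ... Last".
    if "," in name:
        last, _, rest = name.partition(",")
        name = f"{rest} {last}"
    tokens = [t for t in re.findall(r"[a-z]+", name) if t not in SUFFIXES]
    # Drop single-letter middle initials, keeping the first and last tokens.
    if len(tokens) > 2:
        tokens = [tokens[0]] + [t for t in tokens[1:-1] if len(t) > 1] + [tokens[-1]]
    return " ".join(tokens)


def cluster_names(raw_names):
    """Group raw names that share the same normalized key."""
    groups = defaultdict(list)
    for raw in raw_names:
        groups[normalize_name(raw)].append(raw)
    return dict(groups)


# Made-up example strings, not real LobbyView records.
filings = ["Smith, John A., Jr.", "JOHN SMITH", "John Smith", "Doe, Jane", "Jane  Doe"]
clusters = cluster_names(filings)
for key, variants in clusters.items():
    print(key, "->", variants)
```

Exact-key matching like this both over-merges (two different John Smiths) and under-merges (nicknames, typos, accented characters), which is why disambiguation at scale typically needs extra signals such as employer, client, or filing dates.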

Sessions

The Mathematics of Law-Making in the U.S. - In Song Kim, Class of 1956 Career Development Associate Professor of Political Science

Papers

LobbyView Database, In Song Kim

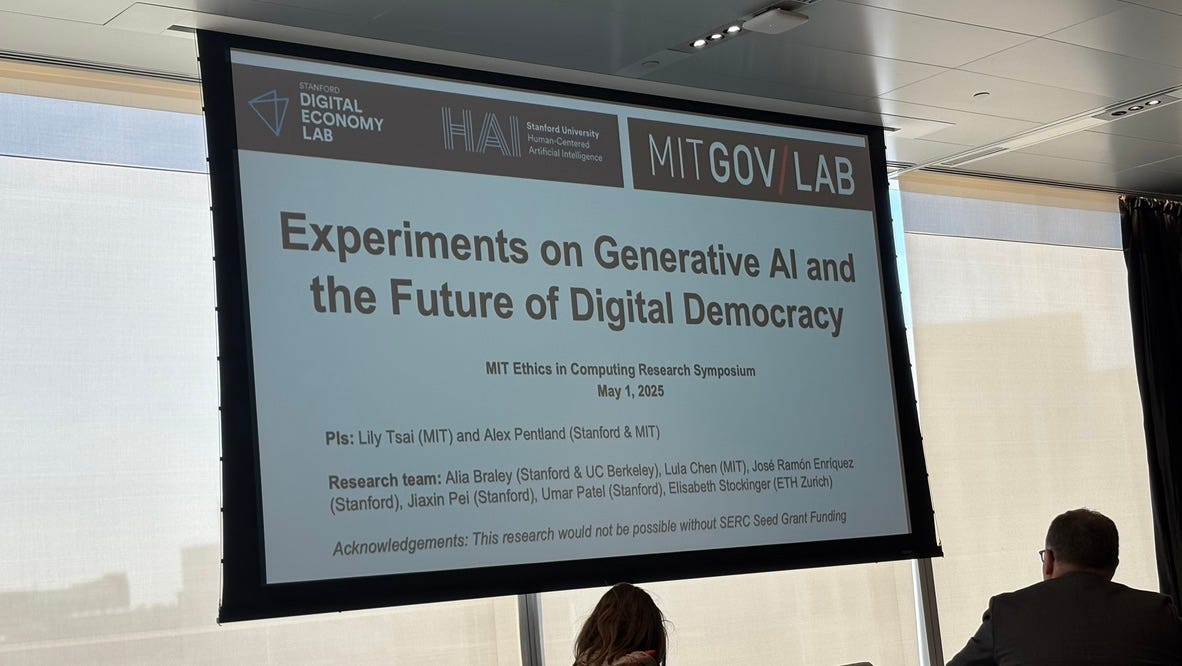

⚖️ Generative AI & Digital Democracy

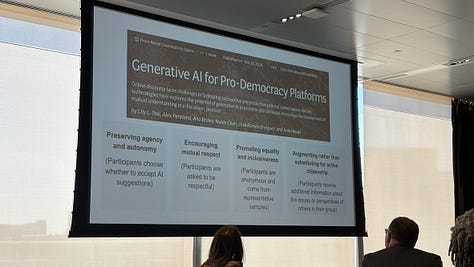

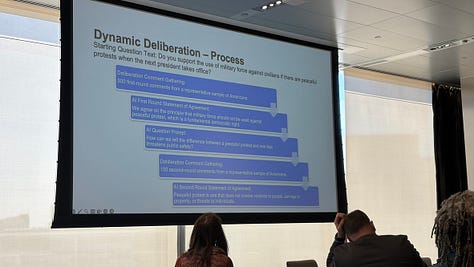

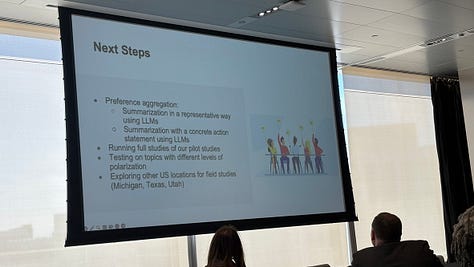

Lily Tsai presented work on Deliberation.io, a platform using AI to scaffold and moderate deliberative, pro-democracy conversations.

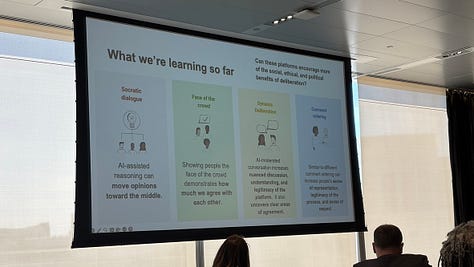

Rather than optimizing for engagement or emotion, the platform focuses on outcomes, especially through Socratic dialogue modules designed to guide users toward reflection, which in turn often leads to centrist, less polarized discourse.

“The chat bot is supporting reflection.”

One feature, Face of the Crowd, surfaces misconceptions and blind spots in group discussions — a technique that could influence not just moderation, but the framing of entire debates.

In the Q&A, conversation turned toward the Overton window — and how AI systems might influence not just what we debate, but how those debates are constructed in the first place.

Sessions

Experiments on Generative AI and the Future of Digital Democracy - Lily Tsai, Ford Professor of Political Science

Papers

🤔 Open Threads & Questions

There were many great sessions and presenters that day, and this collection captures only a portion of them.

A few broad questions for consideration:

What doesn’t randomization fix? One speaker suggested that “randomization helps bring fairness,” but fairness ≠ nuance. What’s lost when we remove all structure?

What clinical-adjacent spaces exist where auxiliary AI (be it generative, agentic, or otherwise) is shaping outcomes in ways that are not regulated, disclosed, or monitored to the degree that clinical settings require?

Where does genuine information diversity come from — and who is incentivized to support it?

Can (or should) AI-supported reflection become the norm in online spaces, rather than the exception? Where is the line between stabilizing or depolarizing a discussion and outright steering or manipulation?

This event reinforced major themes we care about at Data x Direction — the push toward responsible, auditable, and human-centered data systems. We’ll be tracking several of these speakers and projects in future posts.

If you attended the event or have thoughts on any of the themes above, we’d love to hear from you!

Cheers,

Team